The following is an account of my embarrassing ignorance of color spaces, gamma correction, and pretty much everything to do with how a computer outputs things to a monitor.

Recently I've been kicking around an idea for probably the greatest retro-video-game game of all time. I'm 40% sure it will be amazing and spawn a whole new genre of games. I'm also 60% sure it will be not as fun as I imagine, so I thought I'd better test it out first.

As part of prototyping I made some placeholder models in Blender. I added textures to a couple of the models, but - in the interests of saving time - assigned simple solid colors for the rest.

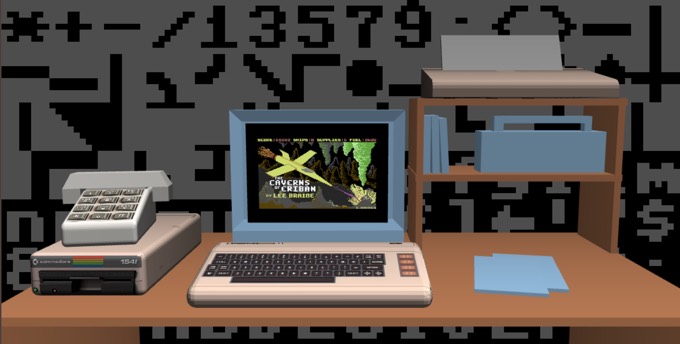

Next I exported the scene as an .obj file and wrote a parser to read the vertex data and materials. These could then be displayed in the browser using WebGL2, as seen in the screenshot below.

Everything (except for the crazy PETSCII background and the C64 computer display) is rendered using a single vertex shader and a single fragment shader.

With the scene-renderer working ~fine~ (well, considering the limited amount of work I put into the shaders) it was time to move on to the game logic... but something was bugging me.

The models with textures looked good enough, but the ones with the "solid color" materials looked darker, and a completely different hue from the Blender source. It really didn't matter, because I was going to texture everything anyway. So who cares hey? Hey?

I bet many of you know where this is going. I bet I should have known too.

Where did things go wrong?

At first I thought the lighting was just too dark - but making it brighter didn't fix the hue shift. To simplify things (and figure out where the problem was coming from), I rendered everything "flat" without shadows. Then I could color-pick the output to compare it with the values in the material data.

Oddly, the WebGL rendering had very similar RGB values to those in the Blender file (and the exported material data), but the colors were obviously quite different. Even stranger, it was the WebGL version that looked correct while the Blender version looked wrong: an RGB value of (0.45, 0.18, 0.09) does look like "Mocha". If I color-picked Blender's Base Color, the result was a much brighter (0.71, 0.46, 0.30) (aka "Santa Fe") rather than its purported values.

A sinking feeling

So at this stage the answer was obvious: my very simple model renderer was correct and Blender 2.9 was wrong and broken. Hmm, that sounds... extremely unlikely. A feeling of dread starts to descend. Is this some kind of color space something-something issue?! Uh oh.

This sounds like a rabbit hole.

I know colors are hard, but I've been able to hide from their complexities for so many years - and was hoping to continue hiding.

"It's just a quick prototype, get on with the game logic!" I mumbled to myself as I started thinking about things I associate with the term "color space": those annoying monitor calibration apps, that dialog box I ignore when Photoshop starts, gamma levels... Wait, could this be a gamma issue? Am I supposed to do my own gamma correction in WebGL?

I spelunked through the WebGL mailing list archives and found an old post that began: "Gamma correction is a tricky business." The feeling of dread swells...

...But then the post continues:

The equation for gamma is Vout = pow ( Vin, gamma ) ; ...where gamma is around 1/2.2 for CRT's and CRT-emulators such as LCD's and plasma displays.

Hey, a chance for a quick fix and to put off learning about color spaces! Let's test it out! Applying the gamma correction to my fragment shader (where color is a vec3 RGB color):

float gamma = 1.0 / 2.2;

out_color = vec4(pow(color, vec3(gamma)), 1.0);

Oh my, that was it! The result looks much closer to the original colors in Blender, and the contrast is much nicer. Blender is obviously doing its own gamma correction (note to self: go find the code), and the gamma exponent value looks pretty close to 1/2.2. I'm sure it's far more complicated than that, but hey... the colors match!
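As a rough sanity check on that claim, here is a small TypeScript sketch that applies the same exponent to the "Mocha" material values and compares the result with the "Santa Fe" values color-picked from Blender. The names (`Rgb`, `gammaCorrect`, `maxGap`) are made up for illustration, and a single 1/2.2 exponent is only an approximation of whatever Blender really does.

```typescript
// RGB triple with each channel in the range 0..1.
type Rgb = [number, number, number];

// Same exponent as the fragment shader: 1 / 2.2 (an approximation).
const GAMMA = 1.0 / 2.2;

// Per-channel pow(), mirroring pow(color, vec3(gamma)) in GLSL.
function gammaCorrect(color: Rgb): Rgb {
  return [
    Math.pow(color[0], GAMMA),
    Math.pow(color[1], GAMMA),
    Math.pow(color[2], GAMMA)
  ];
}

// Base Color values stored in the Blender material ("Mocha").
const materialColor: Rgb = [0.45, 0.18, 0.09];

// Values color-picked from Blender's display ("Santa Fe").
const pickedColor: Rgb = [0.71, 0.46, 0.30];

const corrected = gammaCorrect(materialColor);

// Largest per-channel gap between the corrected and the picked color.
const maxGap = Math.max(
  Math.abs(corrected[0] - pickedColor[0]),
  Math.abs(corrected[1] - pickedColor[1]),
  Math.abs(corrected[2] - pickedColor[2])
);
```

With these inputs `maxGap` stays within a few hundredths per channel: close, but not zero, which fits the suspicion that the real transform is more complicated than one exponent.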

Gamma-correction and images

So, all's well that ends well - back to game dev then? Nope. That's not how rabbit holes work.

My scene has both textured models and non-textured models. The textures were fine before I added the gamma correction. I needed to explicitly not apply the code above to any textures, or else they became over-bright and washed out. But then, how was Blender doing it?

Looking at the shading nodes in my Blender scene - where the image texture is connected to the Base Color - there is a dropdown called "Color Space" that defaults to "sRGB". If I change that to "Linear" the image is washed out and bright like my corrected version. The texture images are already gamma-corrected.
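The washed-out look is what you'd expect from encoding a value twice. A tiny TypeScript sketch, assuming a plain 1/2.2 exponent stands in for the real sRGB curve and using a made-up gray value of 0.2:

```typescript
const ENCODE_GAMMA = 1.0 / 2.2;

// Encode a linear channel value (0..1) for display (approximation of sRGB).
function encodeForDisplay(linear: number): number {
  return Math.pow(linear, ENCODE_GAMMA);
}

// A dark gray in linear light, chosen only for illustration.
const linearGray = 0.2;

// Roughly what an sRGB image file would already store for that gray.
const storedTexel = encodeForDisplay(linearGray);

// Running the shader's gamma correction over the stored texel
// encodes it a second time.
const doubleEncoded = encodeForDisplay(storedTexel);
```

Here `storedTexel` comes out at about 0.48 and `doubleEncoded` at about 0.72, so every texel that goes through the correction a second time gets pushed toward white.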

The right way to do it? Learning it properly?

The proper thing to do at this point is to sit down and do some research. Learn how color spaces are used, and how they should be integrated into my WebGL workflow. But then I wouldn't be making my game. So I'm going to punt on this one - but it will be a short punt. I know my nascent ignorance will come back to bite me soon.

One thing I do know is that it's not enough to just "not apply gamma correction to the image textures". As the mailing-list post makes clear: "pow ( Vin * light, gamma ) != pow ( Vin, gamma ) * light". You need to do all lighting calculations on non-corrected inputs.
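The inequality is easy to check with numbers. A minimal sketch in TypeScript, with arbitrary illustrative values for the color and the light:

```typescript
const DISPLAY_GAMMA = 1.0 / 2.2;

const vin = 0.5;      // linear surface color channel (illustrative)
const lightAmt = 0.5; // light intensity (illustrative)

// Light the linear value first, then gamma-correct the lit result.
const litThenCorrected = Math.pow(vin * lightAmt, DISPLAY_GAMMA);

// Gamma-correct the color first, then apply the light.
const correctedThenLit = Math.pow(vin, DISPLAY_GAMMA) * lightAmt;

// The two orders of operation disagree.
const orderGap = Math.abs(litThenCorrected - correctedThenLit);
```

For these values the first order gives roughly 0.53 and the second roughly 0.36, so lighting an already-corrected color comes out noticeably darker than correcting a lit one.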

My thinking is that I should bake the textures out of Blender in linear space (with gamma = 1.0, exposure = 0.0). Then they should be lit, have fog applied, etc. in my shader, with the gamma correction (exponent 1/2.2) applied only as the very last step of the shader.
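That ordering can be sketched on the CPU in TypeScript. This is only an outline under assumptions: the texel is already linear, the fog color is given in linear space, fog is a simple linear blend, and `shadeLinearTexel`, `mapChannels` and `mixColor` are hypothetical helper names rather than anything from the actual shader.

```typescript
type Color3 = [number, number, number];

const ENCODE_EXPONENT = 1.0 / 2.2; // approximate linear -> display

// Apply a function to each channel.
function mapChannels(c: Color3, f: (v: number) => number): Color3 {
  return [f(c[0]), f(c[1]), f(c[2])];
}

// Linear blend between two colors, like GLSL's mix(a, b, t).
function mixColor(a: Color3, b: Color3, t: number): Color3 {
  return [
    a[0] * (1 - t) + b[0] * t,
    a[1] * (1 - t) + b[1] * t,
    a[2] * (1 - t) + b[2] * t
  ];
}

// Light -> fog -> gamma-correct, all on linear inputs.
function shadeLinearTexel(
  texel: Color3,     // linear texture color
  lightAmount: number,
  fogColor: Color3,  // linear fog color
  fogAmount: number  // 0 = no fog, 1 = all fog
): Color3 {
  const lit = mapChannels(texel, (v) => v * lightAmount);
  const fogged = mixColor(lit, fogColor, fogAmount);
  // Gamma correction happens exactly once, as the final step.
  return mapChannels(fogged, (v) => Math.pow(v, ENCODE_EXPONENT));
}

// Example call with made-up values.
const shadedPixel = shadeLinearTexel([0.2, 0.1, 0.05], 0.8, [0.5, 0.5, 0.5], 0.25);
```

If a texture is stored as sRGB rather than baked linear, it would need decoding (roughly `pow(v, 2.2)`) before the lighting step. WebGL2 also has an `SRGB8_ALPHA8` internal texture format that does that decode when the texture is sampled, which may remove the need for a manual decode.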

But I don't think that's all either. In all the examples of textured models I've seen over the years, none ever look like the texture images are too dark with bad contrast... So I'm not sure.

For now I'll just continue hiding from color spaces.